Thecus N5550 Network Attached Storage Review

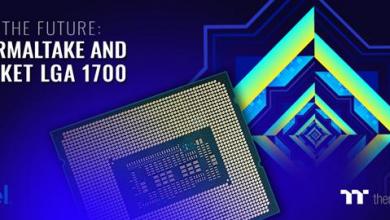

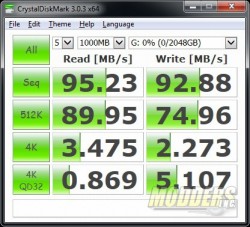

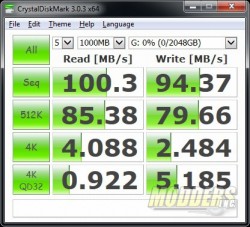

As the charts show, the hard drives limit performance only by a small margin. The SSD results in the N5550 are not much faster and fall well within the 3-5% run-to-run variation we expect when testing. You will very rarely get exactly the same result twice, which is why we run each test three times and average the runs to get the final result. Based on these results, we appear to be at the limit of the Atom processor's ability to process and transfer data.
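
The averaging step and the 3-5% noise band can be sketched as follows. This is a minimal illustration, not our actual test harness: the helper names and the run values are hypothetical.

```python
from statistics import mean


def summarize_runs(runs_mb_s):
    """Average repeated runs of one test and report the spread as % of the mean."""
    avg = mean(runs_mb_s)
    spread_pct = (max(runs_mb_s) - min(runs_mb_s)) / avg * 100
    return avg, spread_pct


def within_noise(a_mb_s, b_mb_s, tolerance_pct=5.0):
    """True if two averaged results differ by less than tolerance_pct of the lower one."""
    lower = min(a_mb_s, b_mb_s)
    return abs(a_mb_s - b_mb_s) / lower * 100 < tolerance_pct


# Hypothetical numbers: three runs each for a hard-drive array and an SSD.
hdd_avg, hdd_spread = summarize_runs([101.0, 99.0, 100.0])
ssd_avg, ssd_spread = summarize_runs([103.0, 104.0, 102.0])
print(f"HDD {hdd_avg:.1f} MB/s (spread {hdd_spread:.1f}%)")
print(f"SSD {ssd_avg:.1f} MB/s (spread {ssd_spread:.1f}%)")
print("difference within noise:", within_noise(hdd_avg, ssd_avg))
```

With a 3% gap between the averages and a 5% tolerance, a pair of results like this would be treated as a tie rather than a real difference.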

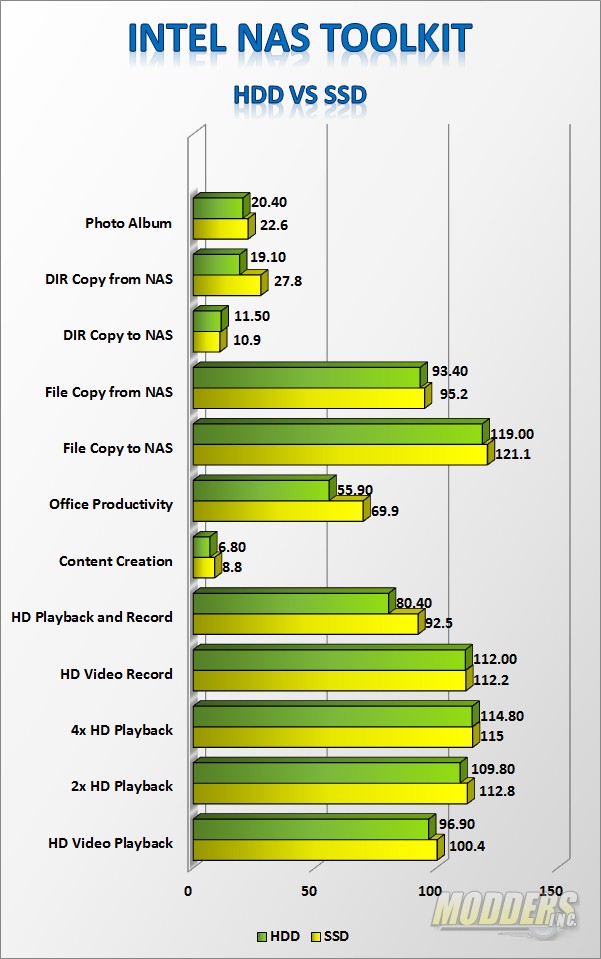

CrystalDiskMark is a good tool for benchmarking drive performance. Once again, all the arrays are very close to each other in performance.

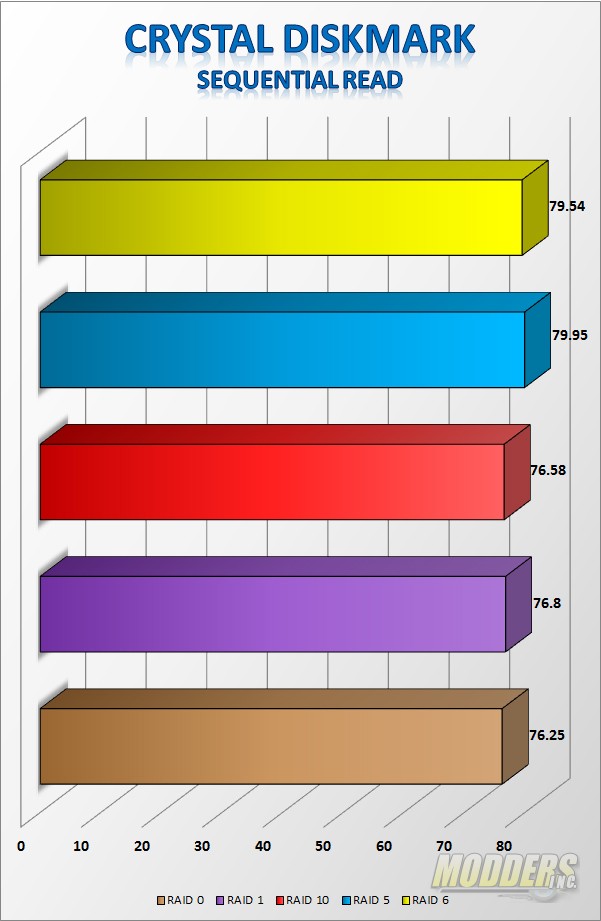

Write speeds are more in line with what I expected, with RAID 1 considerably slower than the others and RAID 10 leading the RAID arrays. JBOD does not stripe data; as I understand it, the disks are simply concatenated, so in this case you would only be using one disk until you filled its 3 TB capacity, at which point the N5550 would move on to the next drive.
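
The fill-one-disk-then-the-next behavior described above corresponds to a linear (concatenated) layout, the same idea as the `linear` mode in Linux md. The sketch below maps a logical offset on such a volume to a disk. It assumes five 3 TB drives, one per bay; the function name is hypothetical, and we have not confirmed how the N5550 firmware lays out JBOD internally.

```python
TB = 10**12  # decimal terabyte, as drive vendors count it


def jbod_locate(offset_bytes, disk_sizes_bytes):
    """Map a logical offset on a linear (concatenated) JBOD volume to a disk.

    Returns (disk_index, offset_on_that_disk). Disks are filled in order,
    so the next disk is only touched once the previous one is full.
    """
    if offset_bytes < 0:
        raise ValueError("offset must be non-negative")
    remaining = offset_bytes
    for index, size in enumerate(disk_sizes_bytes):
        if remaining < size:
            return index, remaining
        remaining -= size
    raise ValueError("offset is past the end of the volume")


# Assumption: five 3 TB drives, one per bay.
disks = [3 * TB] * 5
print(jbod_locate(1 * TB, disks))   # still on the first disk
print(jbod_locate(3 * TB, disks))   # first byte of the second disk
print(jbod_locate(14 * TB, disks))  # somewhere on the fifth disk
```

Because every offset below 3 TB resolves to disk 0, a mostly empty JBOD volume behaves like a single drive, which is consistent with the results above.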

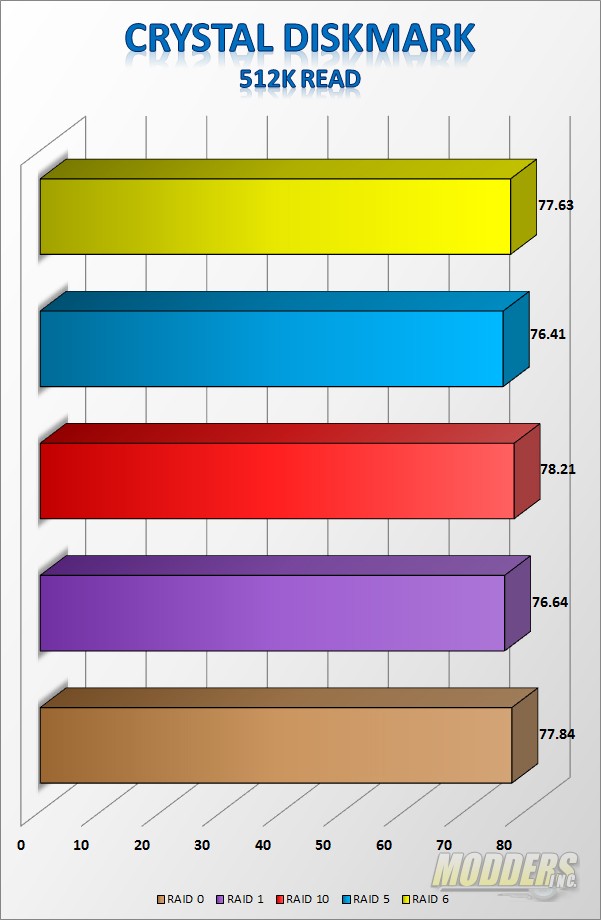

The 512K read test shows very little difference between the arrays, although RAID 10 ekes out just a little more performance.

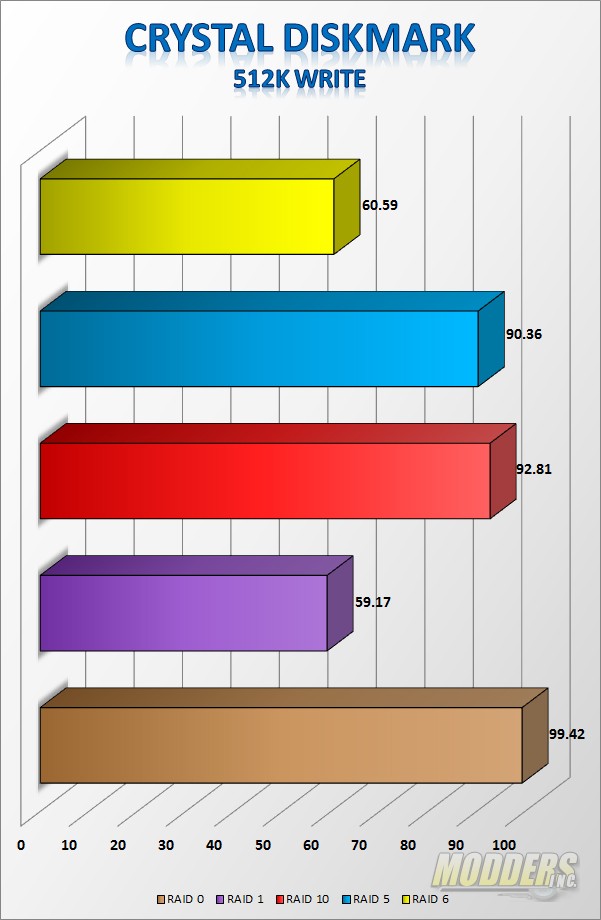

Writes are typically hard on RAID arrays, and that clearly shows for RAID 1 and RAID 6. RAID 0 comes in at just under 100 MB/s, with RAID 10 not far behind.

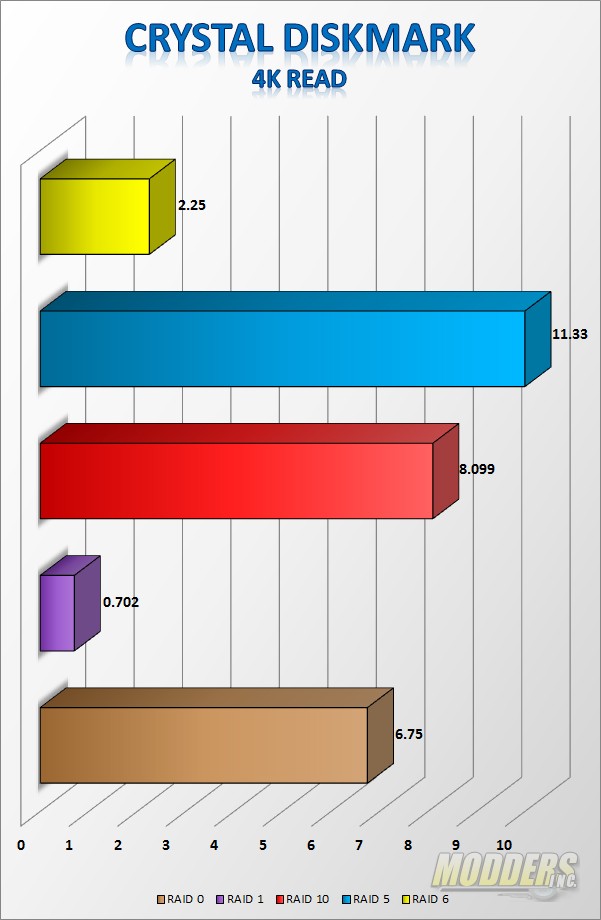

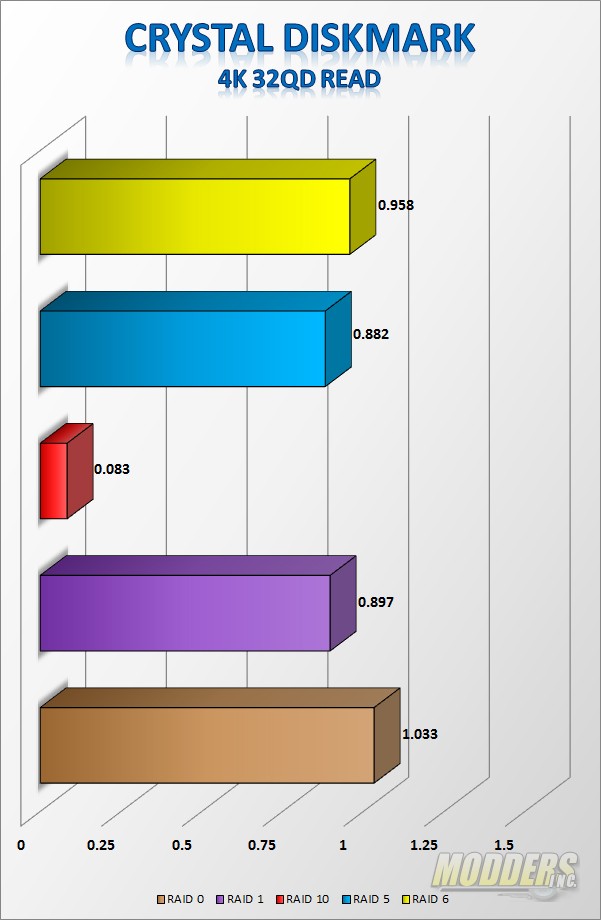

Small 4K transfers can really hammer drive performance, and in this case RAID 5 takes the lead. RAID 1 takes a massive performance hit as it tries to read from both drives.

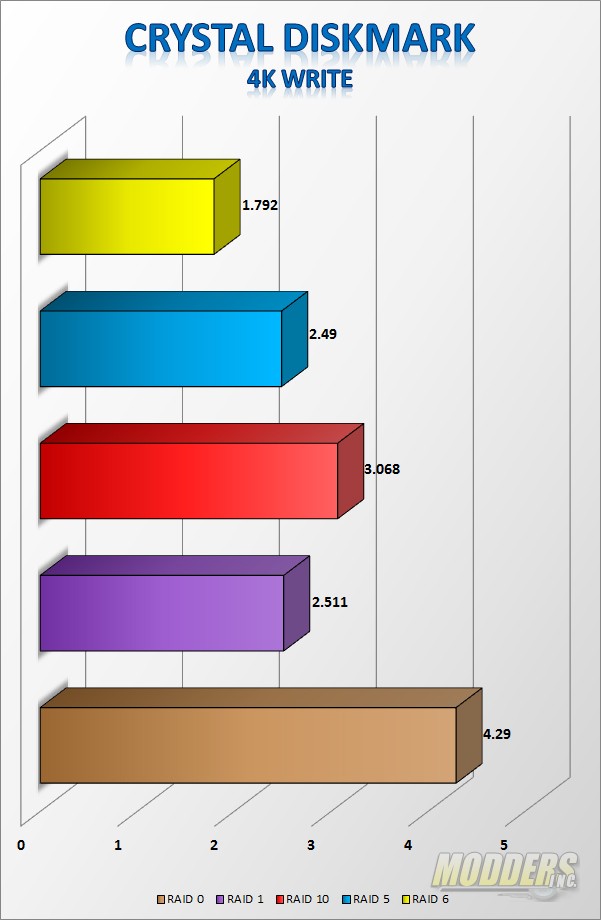

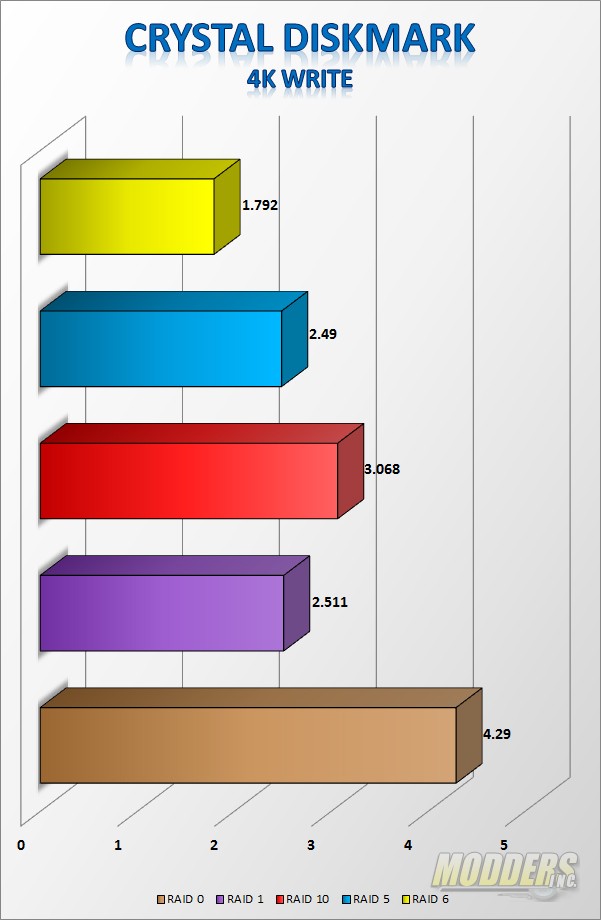

4K writes are a bit different from reads: the parity penalty is visible in both RAID 5 and RAID 6, more so for RAID 6, while RAID 0 continues to post the fastest speeds.
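
The parity penalty can be put into numbers with the commonly quoted small-random-write penalties (back-end disk I/Os per host write). This is a rough rule of thumb, not a model of the N5550: it ignores write caching and full-stripe writes, and the per-disk IOPS figure below is hypothetical.

```python
# Back-end disk I/Os needed for one small random host write (rule of thumb).
WRITE_PENALTY = {
    "RAID 0": 1,   # write the block once, no redundancy
    "RAID 1": 2,   # write the block to both mirrors
    "RAID 10": 2,  # write the block to both disks of one mirrored pair
    "RAID 5": 4,   # read old data + old parity, write new data + new parity
    "RAID 6": 6,   # read old data + two parities, write data + two parities
}


def random_write_iops(per_disk_iops, disk_count, level):
    """Rough ceiling on random-write IOPS for an array, ignoring any caching."""
    return per_disk_iops * disk_count / WRITE_PENALTY[level]


# Hypothetical: four drives that each manage about 100 random IOPS.
for level in ("RAID 0", "RAID 10", "RAID 5", "RAID 6"):
    print(f"{level:7s} ~{random_write_iops(100, 4, level):.0f} IOPS")
```

The ordering this produces (RAID 0 fastest, RAID 6 paying more than RAID 5) matches the 4K write results, though the absolute numbers are only illustrative.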

Adding a queue depth of 32 really hammers the arrays' performance.

Write performance is considerably better with deep queues on the arrays.
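
One way to reason about queue depth is Little's law: the average number of outstanding I/Os equals IOPS multiplied by average latency, so IOPS = queue depth / latency. The sketch below applies it with hypothetical latencies; the real figures depend on the drives, the RAID level and the network, and were not measured here.

```python
def littles_law_iops(queue_depth, avg_latency_ms):
    """Steady-state IOPS implied by Little's law: outstanding I/Os / latency."""
    return queue_depth / (avg_latency_ms / 1000.0)


def iops_to_mb_s(iops, block_bytes=4096):
    """Convert IOPS at a fixed block size to MB/s (decimal megabytes)."""
    return iops * block_bytes / 1_000_000


# Hypothetical: latency rises as the queue deepens, but not 32x.
for qd, latency_ms in ((1, 10.0), (32, 80.0)):
    iops = littles_law_iops(qd, latency_ms)
    print(f"QD{qd:<2} {iops:6.0f} IOPS  {iops_to_mb_s(iops):.2f} MB/s at 4K")
```

If latency grew as fast as the queue (for example, 320 ms at QD32), IOPS would stay flat, so whether a deep queue helps depends on how well the array can service requests in parallel.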

All of the tests above were run against simple shared folders via Samba. The other option is iSCSI, which essentially takes a “folder” on the RAID array and makes your PC think it is a local drive. The main difference is that some caching happens on a local drive that does not happen on a network drive. Two tests were performed, both on a two-drive RAID 0 array; the difference between them was that in the second test the client used both ports on its network card with link aggregation (LAG) enabled.

Charts: iSCSI No LAG, followed by iSCSI LAG Enabled

There are a couple of things going on here. With iSCSI, caching takes place on the host, so performance is a little better, and using link aggregation improves performance ever so slightly on top of that. However, the link aggregation standard (IEEE 802.3ad, now 802.1AX) requires that frames not arrive out of order, so the traffic is not really split across the two links: most of the data travels over one link, while a small amount of other traffic travels over the other. With a single client, only very minor balancing takes place.

Where link aggregation really shines is when multiple clients are accessing data. In the last round of testing, I was able to get very similar results on three different systems, each using a single link, with the N5550 using both of its links. All three clients achieved results very close to the first result above.
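
To illustrate why one client sees little benefit, here is a simplified transmit hash in the spirit of layer-2 policies such as the Linux bonding driver's default: every frame between the same pair of MAC addresses goes out on the same link, which keeps frames in order but means one conversation never uses both links. The MAC addresses and the exact hash are hypothetical; we do not know which policy the N5550 or the switch uses.

```python
from collections import Counter


def pick_link(src_mac: str, dst_mac: str, num_links: int) -> int:
    """Pick an outgoing link from the last byte of each MAC address (simplified)."""
    src_last = int(src_mac.split(":")[-1], 16)
    dst_last = int(dst_mac.split(":")[-1], 16)
    return (src_last ^ dst_last) % num_links


NAS_MAC = "00:14:fd:00:00:10"  # hypothetical
CLIENT_MACS = ["00:1b:21:00:00:01", "00:1b:21:00:00:02", "00:1b:21:00:00:03"]

# One client: all of its frames from the NAS hash to a single link.
one_client = Counter(pick_link(NAS_MAC, CLIENT_MACS[0], 2) for _ in range(1000))

# Three clients: different MAC pairs can land on different links.
three_clients = Counter(pick_link(NAS_MAC, mac, 2) for mac in CLIENT_MACS)

print("one client, frames per link:", dict(one_client))
print("three clients, clients per link:", dict(three_clients))
```

With only two links and a hash like this, balancing across clients is statistical: two clients can just as easily end up sharing the same link.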