Thecus N5550 Network Attached Storage Review

Testing

System Configuration

| Component | Model |
|---|---|
| Case | Cooler Master Cosmos II SE |
| CPU | Intel Core i7-4770K |
| Motherboard | Gigabyte GA-Z77X-UDH3 |
| RAM | 2 GB G.Skill F3-12800CL9Q DDR3-1600 |
| GPU | EVGA GTX 770 OC |
| Hard Drives | Samsung 840 EVO 256 GB SSD |
| | Western Digital Black 500 GB 7200 RPM HDD |
| Power Supply | NZXT Hale v2 1000 W |

Five Seagate Barracuda 3 TB 7200 RPM drives were installed in the NAS. All RAID levels used the default 64 KB stripe size, and the RAID 1 test used only two drives.

Since the N5550 has two network ports, an Intel dual-port server network card was installed in the test workstation.

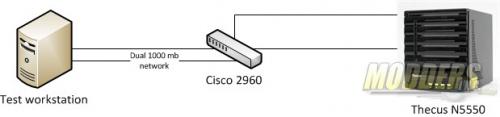

Network Layout

For all tests the N5550 was configured for 802.3ad link aggregation, with two Cat 6 cables connected to a Cisco 2960 switch that was also configured for 802.3ad. Standard tests were run from the workstation with only one network port active. Additional tests were run with both ports of the workstation's network card teamed, also using 802.3ad. Interrupt moderation was disabled, and jumbo frames were not enabled.
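802.3ad aggregation balances traffic per conversation rather than per packet: each side hashes header fields to pick one member link for a given flow, so a single client-to-NAS transfer generally cannot exceed the speed of one link. The sketch below illustrates this with a simplified layer-2 hash (last byte of the source MAC XOR last byte of the destination MAC, modulo the number of links). That hash, the function name `select_link`, and the MAC addresses are all assumptions for illustration; the Cisco 2960 and the Intel teaming driver each apply their own configurable policy.

```python
def select_link(src_mac: str, dst_mac: str, num_links: int) -> int:
    """Choose a member link for a frame with a simplified layer-2 hash.

    Assumption: hash = (last byte of source MAC XOR last byte of destination MAC)
    modulo the number of links. Real switches and NIC team drivers use their own,
    configurable hash policies.
    """
    src_last = int(src_mac.split(":")[-1], 16)
    dst_last = int(dst_mac.split(":")[-1], 16)
    return (src_last ^ dst_last) % num_links


# Hypothetical MAC addresses, for illustration only.
nas = "00:14:fd:12:34:57"
workstation = "00:1b:21:aa:bb:10"
second_client = "00:1b:21:aa:bb:11"

# Every frame in one conversation hashes to the same link...
links_used = {select_link(workstation, nas, 2) for _ in range(1000)}
print(links_used)  # {1}

# ...while a conversation with a different source MAC may land on the other link.
print(select_link(second_client, nas, 2))  # 0
```

Because the hash is deterministic, extra links mainly help when several conversations run at once, which is what teaming both workstation ports aims to exercise.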

Software

To test the Thecus N5550, two applications were used: the Intel NAS Performance Toolkit and Crystal Disk Mark.

The Intel NAS Performance Toolkit simulates various storage workloads, such as video streaming, copying files and folders to and from the NAS, and creating content directly on the NAS. To limit client-side caching, the workstation was fitted with a single 2 GB G.Skill memory module for all tests. All toolkit options were left at their defaults. The NAS Performance Toolkit is a free download.

To run Crystal Disk Mark, a network share was mapped as a drive letter.

iSCSI targets were also created on the N5550 and mounted on the workstation as local drives.

Each test was run three times, and the results were averaged to produce the final figures.
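The averaging step is a plain arithmetic mean of the three runs. A minimal sketch, where `average_runs` is a hypothetical helper and the throughput figures are made-up placeholders rather than measured results:

```python
from statistics import mean


def average_runs(runs: list[float]) -> float:
    """Return the arithmetic mean of repeated benchmark runs (e.g. MB/s)."""
    if not runs:
        raise ValueError("at least one run is required")
    return mean(runs)


# Placeholder throughput figures in MB/s, not results from this review.
example_runs = [98.4, 101.2, 99.7]
print(round(average_runs(example_runs), 2))  # 99.77
```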

RAID Information

Images courtesy of Wikipedia.

JBOD, or Just a Bunch Of Disks, is exactly what the name describes. The drives have no actual RAID functionality such as striping or redundancy; their capacities are simply spanned into a single volume, so each block of that volume lives on exactly one disk.
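A spanned volume is a concatenation: the first disk holds the first blocks of the volume, the next disk continues where it ends, and so on. The sketch below maps a logical block address to a disk under that assumption; `jbod_locate` and the disk sizes (in blocks) are hypothetical, and the Thecus firmware may organize its span differently.

```python
def jbod_locate(lba: int, disk_sizes: list[int]) -> tuple[int, int]:
    """Map a logical block on a spanned (concatenated) volume to (disk, block on disk)."""
    offset = lba
    for disk, size in enumerate(disk_sizes):
        if offset < size:
            return disk, offset
        offset -= size  # skip past this disk and continue on the next one
    raise ValueError("LBA is beyond the end of the spanned volume")


# Hypothetical disk sizes in blocks; a span can mix sizes.
sizes = [1000, 500, 2000]
print(jbod_locate(0, sizes))     # (0, 0)
print(jbod_locate(1200, sizes))  # (1, 200)
print(jbod_locate(1500, sizes))  # (2, 0)
print(sum(sizes))                # 3500: capacity is the sum of all disks
```

Losing one disk in a span loses whatever blocks lived on it, since there is no second copy.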

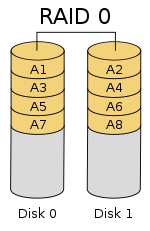

RAID 0 is a stripe set: data is split into blocks that are written evenly across all the disks. The advantages of RAID 0 are speed and increased capacity. RAID 0 provides no redundancy, however, so the failure of any single disk destroys the whole array.
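Striping assigns fixed-size stripe units to the disks round-robin. The sketch below maps a byte offset on the volume to a disk using the 64 KB stripe size from these tests, assuming "stripe size" means the per-disk chunk (as in Linux software RAID); `raid0_locate` is a hypothetical helper, not Thecus code.

```python
STRIPE_SIZE = 64 * 1024  # 64 KB, the default stripe size used in these tests


def raid0_locate(offset: int, num_disks: int, stripe_size: int = STRIPE_SIZE) -> tuple[int, int]:
    """Map a byte offset on a RAID 0 volume to (disk index, byte offset on that disk)."""
    chunk, within = divmod(offset, stripe_size)  # which stripe unit, and where inside it
    disk = chunk % num_disks                     # units rotate round-robin across disks
    row = chunk // num_disks                     # full rows of units before this one
    return disk, row * stripe_size + within


# Five disks, as in the N5550 tests.
print(raid0_locate(0, 5))                    # (0, 0)
print(raid0_locate(64 * 1024, 5))            # (1, 0)
print(raid0_locate(5 * 64 * 1024 + 100, 5))  # (0, 65636)

# A 1 MB sequential read touches every disk, which is where RAID 0's speed comes from.
touched = {raid0_locate(o, 5)[0] for o in range(0, 1024 * 1024, STRIPE_SIZE)}
print(sorted(touched))  # [0, 1, 2, 3, 4]
```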

RAID 1 is a mirrored set: every piece of data is written to both disks, which provides redundancy. The disadvantage of RAID 1 is that write speed is lower than with RAID 0, because every write must be performed on both disks. RAID 1 capacity is that of the smallest disk.
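A toy model makes the trade-off concrete: one logical write becomes a physical write on every member, reads can be served by any surviving copy, and usable space is capped by the smallest disk. `Mirror` is a hypothetical in-memory class for illustration only.

```python
class Mirror:
    """Toy RAID 1: every write goes to all member disks; reads can come from any one."""

    def __init__(self, disk_sizes: list[int]) -> None:
        self.capacity = min(disk_sizes)  # usable space is limited by the smallest disk
        self.disks = [bytearray(self.capacity) for _ in disk_sizes]

    def write(self, offset: int, data: bytes) -> int:
        if offset + len(data) > self.capacity:
            raise ValueError("write past end of array")
        for disk in self.disks:  # one physical write per member disk
            disk[offset:offset + len(data)] = data
        return len(self.disks)  # number of physical writes performed

    def read(self, offset: int, length: int, disk: int = 0) -> bytes:
        return bytes(self.disks[disk][offset:offset + length])

    def fail(self, disk: int) -> None:
        del self.disks[disk]  # simulate losing one member


m = Mirror([1000, 1200])     # hypothetical sizes in bytes
print(m.capacity)            # 1000
print(m.write(10, b"hello")) # 2 physical writes for 1 logical write
m.fail(0)                    # lose the first disk
print(m.read(10, 5))         # b'hello' survives on the remaining mirror
```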

RAID 10 combines the first two RAID levels: it is a stripe set built from mirrored pairs (RAID 1+0). This gives it much of the speed of RAID 0 along with the redundancy of RAID 1.
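In the common "stripe of mirrors" layout, disks are grouped into mirrored pairs and chunks are striped across the pairs RAID 0 style, so each chunk lands on both disks of one pair. The sketch assumes adjacent pairs (0,1), (2,3), and so on, and an even disk count; `raid10_locate` is hypothetical, and real implementations (including Linux md's raid10 layouts) can differ.

```python
def raid10_locate(chunk: int, num_disks: int) -> tuple[int, int, int]:
    """Map a logical chunk number to (disk A, disk B, chunk row) in a RAID 10 array.

    Assumes disks are grouped into adjacent mirror pairs (0,1), (2,3), ...
    and chunks are striped across the pairs, RAID 0 style.
    """
    if num_disks < 4 or num_disks % 2:
        raise ValueError("this sketch needs an even number of disks, at least 4")
    pairs = num_disks // 2
    pair, row = chunk % pairs, chunk // pairs  # stripe across pairs
    return 2 * pair, 2 * pair + 1, row         # both disks in the pair hold the chunk


print(raid10_locate(0, 4))  # (0, 1, 0)
print(raid10_locate(1, 4))  # (2, 3, 0)
print(raid10_locate(2, 4))  # (0, 1, 1)
```

Half the raw capacity goes to the mirror copies, and the array survives one failure per pair.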

RAID 5 is a stripe set with parity and requires at least three disks. Data is striped across the disks, and the parity blocks are distributed across all of them, so each disk holds some parity. RAID 5 can survive the loss of one drive without losing data. The advantage of RAID 5 is that read speeds increase as drives are added; the disadvantage is slower writes, because every write requires the parity to be recalculated and updated. That overhead is larger with software RAID 5, where the parity calculation is done by the CPU.
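RAID 5 parity is the byte-wise XOR of the data blocks in a stripe, which is what lets any single missing block be rebuilt from the rest. The sketch shows parity generation, rebuild, the small-write parity update that causes the write overhead, and a simplified parity rotation. The helper names and the 2-byte blocks are hypothetical, and the rotation is one simple scheme rather than the N5550's actual layout.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks (RAID 5 parity is the XOR of the data blocks)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def update_parity(old_parity: bytes, old_data: bytes, new_data: bytes) -> bytes:
    """Small-write update: new parity = old parity XOR old data XOR new data.

    This is why a small RAID 5 write costs extra I/O: the old data and old parity
    must be read before the new data and new parity can be written.
    """
    return xor_blocks([old_parity, old_data, new_data])


def parity_disk(stripe: int, num_disks: int) -> int:
    """Simplified rotation: the parity block moves one disk to the left on each stripe."""
    return (num_disks - 1 - stripe) % num_disks


# One stripe with three data blocks (hypothetical 2-byte blocks).
data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = xor_blocks(data)
print(parity.hex())  # ee22

# Lose the second block, then rebuild it from the survivors plus parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt == data[1])  # True

# Parity location rotates across a five-disk array.
print([parity_disk(s, 5) for s in range(6)])  # [4, 3, 2, 1, 0, 4]
```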

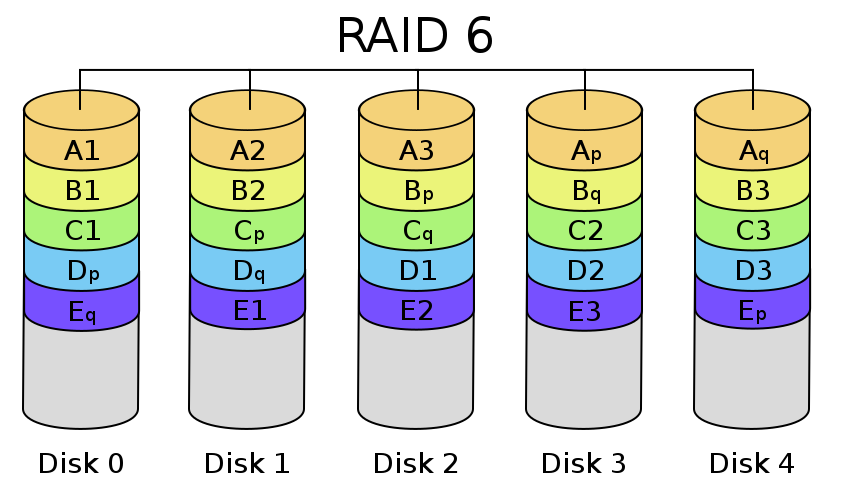

RAID 6 extends RAID 5 with a second parity block, also distributed across all the disks, so the array can survive the loss of any two drives. Because two parity blocks must be calculated and written, RAID 6 carries more overhead than RAID 5.
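Putting the levels side by side, the usable capacity of the five 3 TB Barracudas shows the cost of each redundancy scheme. The sketch below uses the standard formulas and ignores file system and metadata overhead; `usable_capacity` is a hypothetical helper. RAID 1 uses two drives as in the test, while the four-drive RAID 10 set is an assumption, since the review does not state how many drives that test used.

```python
def usable_capacity(level: str, disks_tb: list[float]) -> float:
    """Approximate usable capacity in TB, ignoring file system and metadata overhead."""
    n, smallest = len(disks_tb), min(disks_tb)
    minimum_disks = {"JBOD": 1, "RAID 0": 2, "RAID 1": 2, "RAID 10": 4, "RAID 5": 3, "RAID 6": 4}
    if level not in minimum_disks:
        raise ValueError(f"unknown RAID level: {level}")
    if n < minimum_disks[level]:
        raise ValueError(f"{level} needs at least {minimum_disks[level]} disks")
    if level == "JBOD":
        return sum(disks_tb)           # spanned: every disk's full capacity
    if level == "RAID 0":
        return n * smallest            # striped: no redundancy
    if level == "RAID 1":
        return smallest                # mirrored: one disk's worth
    if level == "RAID 10":
        if n % 2:
            raise ValueError("this sketch assumes mirrored pairs (even disk count)")
        return (n // 2) * smallest     # striped mirrors: half the disks
    if level == "RAID 5":
        return (n - 1) * smallest      # one disk's worth of parity
    return (n - 2) * smallest          # RAID 6: two disks' worth of parity


five = [3.0] * 5
for level in ("JBOD", "RAID 0", "RAID 5", "RAID 6"):
    print(level, usable_capacity(level, five))       # 15.0, 15.0, 12.0, 9.0
print("RAID 1", usable_capacity("RAID 1", [3.0, 3.0]))  # 3.0
print("RAID 10", usable_capacity("RAID 10", [3.0] * 4))  # 6.0 (assumed four drives)
```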

For a full breakdown of RAID levels, see the Wikipedia article on standard RAID levels.